Understanding Large Language Models: Chapter 1 - Transformers and the Transformer Block

Large language models (LLMs) have taken the world by storm since the public release of the GPT-based ChatGPT. The application itself has found a large user base across many industries and use cases. In this blog series, we will try to understand how large language models work, how they are trained, what data they are trained on, how to use them in our applications, and how to evaluate them. The series balances theory and practice.

What is a language model?

A language model is a mathematical model that assigns a probability distribution over sequences of tokens. Put simply, given a sequence of preceding words, the model must be able to predict the next word appropriately.

This sounds simple, but it is not. To do this well, a language model needs linguistic competence as well as some knowledge of the world.
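
To make the idea of "a probability distribution over the next word" concrete, here is a minimal sketch of a bigram model that predicts the next word from counts of word pairs. The corpus and the function names `train_bigram_model` and `next_word_distribution` are invented for illustration; real LLMs are neural networks trained on far more data, not count tables.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_distribution(counts, prev):
    """Turn the raw counts for `prev` into probabilities that sum to 1."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}


# Toy corpus, made up for this example only.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]

counts = train_bigram_model(corpus)
dist = next_word_distribution(counts, "the")
most_likely = max(dist, key=dist.get)
print(dist)
print("most likely word after 'the':", most_likely)
```

A model this small has no world knowledge and only one word of context, which is exactly the limitation that larger neural language models try to overcome.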

But how does a language model convert text into a representation it can work with? This is where the concepts of tokenization and embeddings come in.

Tokenization and Embedding: Representing text numerically

Sure, here's the translated text in simplified Chinese, maintaining the HTML structure: ```html

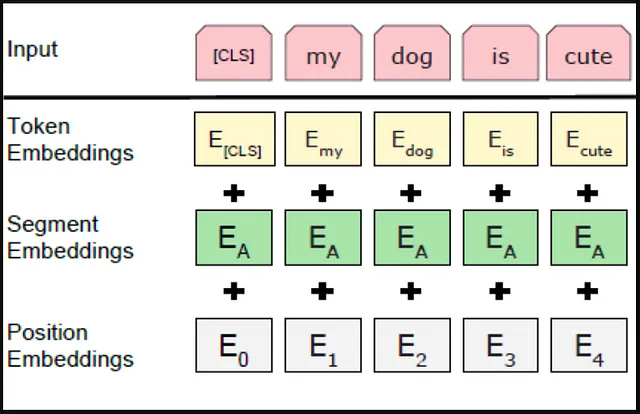

在我们深入建模部分之前,让我们先了解一下模型如何理解单词。神经网络模型是一个数学模型。因此,我们需要找到一种方法来用数字表示文本。但在我们能够将文本表示为数字之前,我们需要将文本分解为单独的片段。我们可以称这个单独的片段为一个标记。

``` This HTML snippet preserves the structure and formatting while providing the translation in simplified Chinese.Certainly! Here's the translation of "Tokenization" in simplified Chinese within an HTML structure: ```html 分词 ``` In this HTML snippet, `` tags are used to encapsulate the translated text "分词" (which means "Tokenization" in simplified Chinese).

Sure, here is the HTML structure with the translated simplified Chinese text inserted: ```html

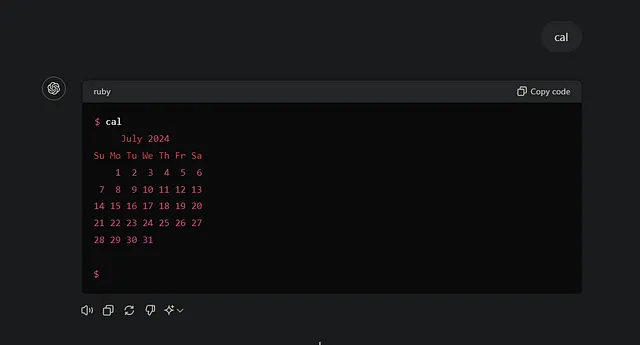

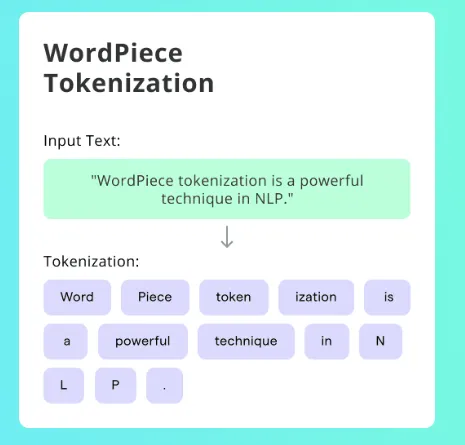

将文本分解为单独的有意义片段的过程称为标记化。这是神经网络自然语言处理模型中的一个关键过程。有几种不同的标记化方法,其中基本的一种是空格标记化,即在空格处分割句子。我们将在后续的博客中详细讨论其他的标记化技术。

``` In simplified Chinese: ```html将文本分解为单独的有意义片段的过程称为标记化。这是神经网络自然语言处理模型中的一个关键过程。有几种不同的标记化方法,其中基本的一种是空格标记化,即在空格处分割句子。我们将在后续的博客中详细讨论其他的标记化技术。

``` This HTML snippet contains the translated text in simplified Chinese, maintaining the original HTML structure.

You can see tokenization in action here: link
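
Whitespace tokenization can be sketched in a few lines. The example below also maps each token to an integer id, which is one simple way to start turning text into numbers. The helper names `whitespace_tokenize` and `build_vocab` are hypothetical, and production tokenizers typically use subword schemes instead.

```python
def whitespace_tokenize(text):
    """Split text into tokens at runs of whitespace."""
    return text.split()


def build_vocab(tokens):
    """Assign each distinct token an integer id in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


sentence = "the cat sat on the mat"
tokens = whitespace_tokenize(sentence)
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]

print(tokens)     # ['the', 'cat', 'sat', 'on', 'the', 'mat']
print(token_ids)  # [0, 1, 2, 3, 0, 4]
```

Note that both occurrences of "the" receive the same id, so the model sees them as the same token.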

Word Embedding

```html

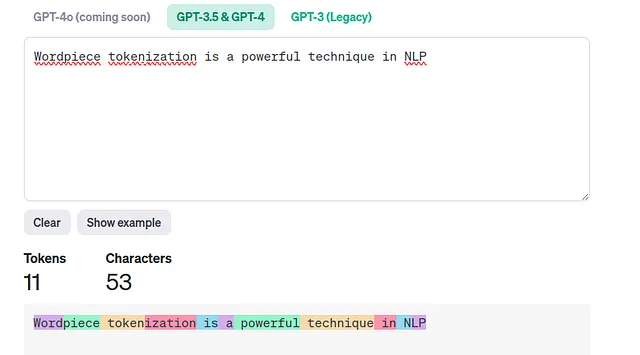

现在我们已经有了文本元素的模块化表示,接下来的问题是如何对这些元素进行有意义的数学表示。模型实际上是数学模型,需要数学输入才能运作。那么,我们如何将文本标记转换为模型可以处理的有意义的数学表示呢?这就是词嵌入的概念登场。

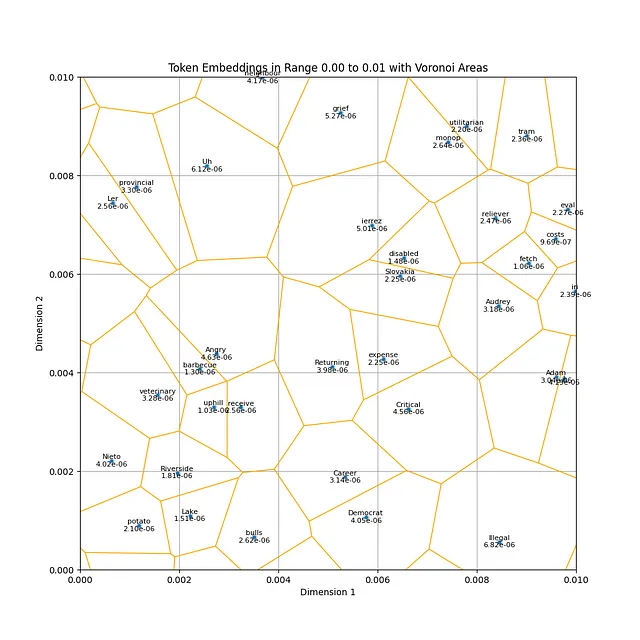

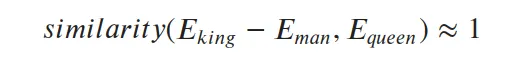

```在词嵌入中,我们基本上旨在将标记转换为向量,该向量表示该词在某个潜在的数学空间中的一些属性。这些表示可能不一定对我们来说具有意义。但是这些嵌入具有一些有趣的特性。并且这些嵌入表示与模型一起也是被学习的。让我们探索一些词嵌入的有趣特性。

- Words that are semantically close in meaning have a higher similarity between their vectors than words that are not.

- Algebraic operations performed on word vectors yield vectors that are close to the words we would expect from the corresponding semantic operations.

- We can uncover certain biases in the dataset by performing algebraic operations on word vectors.
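
The first two properties can be illustrated with a small sketch. The vectors below are hand-written toy values, not learned embeddings, chosen so that the geometry is easy to follow. The helper names `cosine_similarity` and `nearest_word` are assumptions made for this example.

```python
import numpy as np

# Hand-written toy vectors (NOT learned embeddings). The four dimensions
# loosely stand for: royalty, maleness, femaleness, fruit-ness.
embeddings = {
    "king":  np.array([0.9, 0.8, 0.1, 0.0]),
    "queen": np.array([0.9, 0.1, 0.8, 0.0]),
    "man":   np.array([0.1, 0.9, 0.1, 0.0]),
    "woman": np.array([0.1, 0.1, 0.9, 0.0]),
    "apple": np.array([0.0, 0.1, 0.1, 0.9]),
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def nearest_word(vector, exclude=()):
    """Return the vocabulary word whose vector is most similar to `vector`."""
    candidates = {w: v for w, v in embeddings.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine_similarity(vector, candidates[w]))


# Property 1: related words are more similar than unrelated ones.
sim_close = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_far = cosine_similarity(embeddings["king"], embeddings["apple"])
print(f"king~queen: {sim_close:.3f}, king~apple: {sim_far:.3f}")

# Property 2: vector arithmetic mirrors a semantic operation.
target = embeddings["king"] - embeddings["man"] + embeddings["woman"]
analogy = nearest_word(target, exclude=("king", "man", "woman"))
print("king - man + woman is closest to:", analogy)
```

With learned embeddings the same arithmetic can also surface unwanted associations picked up from the training data, which is how the bias mentioned in the third property is usually probed.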

Transformers: The type of architecture that has driven major progress in natural language processing tasks

Sure, here's the translated text in simplified Chinese while keeping the HTML structure intact: ```html

如果你看今天大多数成为头条的模型,几乎所有这些模型都有一个共同点,那就是基于Transformer架构。

``` This HTML snippet contains the translated text: "如果你看今天大多数成为头条的模型,几乎所有这些模型都有一个共同点,那就是基于Transformer架构。"Certainly! Here's the HTML structure with the translated text in simplified Chinese: ```html

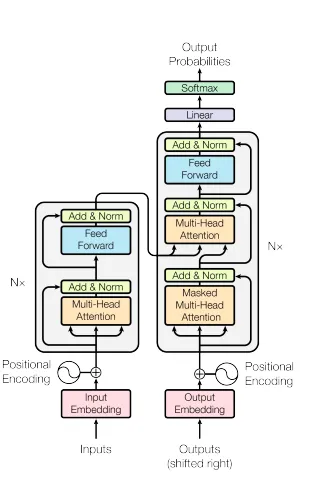

Google使用论文《Attention is all you need》介绍了基于Transformer架构。这彻底改变了自然语言处理行业,Transformers成为了NLP进步的支柱。

``` In simplified Chinese: ```htmlGoogle使用论文《Attention is all you need》介绍了基于Transformer架构。这彻底改变了自然语言处理行业,Transformers成为了NLP进步的支柱。

```Certainly! Here's the translation of the text into simplified Chinese while keeping the HTML structure: ```html

Transformers are parallel: Earlier models used in NLP were majorly Recurrent Neural Network based models, where the operations happen sequentially. Transformers has a parallel architecture, so that all the input tokens are fed in the model at once.

``` ```htmlTransformer 是并行的:早期在自然语言处理中使用的主要是基于循环神经网络的模型,其操作是按顺序进行的。Transformer 具有并行架构,因此所有输入的 token 都可以一次性输入模型。

``` This HTML code segment now contains the text translated into simplified Chinese.Sure, here's the translation of "Problems with previous Recurrent Models" in simplified Chinese, while keeping the HTML structure intact: ```html Problems with previous 循环模型 ``` In the translation: - `循环模型` ensures that "循环模型" (Recurrent Models) is in simplified Chinese, within the context of the HTML structure.

- Linear interaction distance: Recurrent models are rolled out from left to right, so nearby words affect each other's meaning the most. Recent words therefore influence the model's prediction far more than words earlier in the sentence.

- Difficulty learning long-distance dependencies: Because more weight is placed on recent words, the model struggles to capture long-range dependencies.

- No parallelism in the forward and backward passes: The computation must proceed in n steps, where n is the number of tokens in the sentence. GPUs are therefore underused, and training on large datasets becomes difficult.
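
The lack of parallelism can be seen in a minimal sketch of a simple recurrent layer next to a per-token operation. This only illustrates the dependency structure; the weights are random, and the names `rnn_forward` and `parallel_projection` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 6, 4                        # n tokens, embedding size d (arbitrary values)
X = rng.normal(size=(n, d))        # stand-in token embeddings
W_x = rng.normal(size=(d, d)) * 0.1
W_h = rng.normal(size=(d, d)) * 0.1


def rnn_forward(X, W_x, W_h):
    """Simple recurrence: step t cannot start before step t-1 has finished."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in X:                  # n sequential steps, one per token
        h = np.tanh(x_t @ W_x + h @ W_h)
        states.append(h)
    return np.stack(states)


def parallel_projection(X, W_x):
    """Each row depends only on its own token, so one matrix multiply covers all."""
    return np.tanh(X @ W_x)


rnn_states = rnn_forward(X, W_x, W_h)
parallel_out = parallel_projection(X, W_x)
print(rnn_states.shape, parallel_out.shape)
```

The loop in `rnn_forward` is the bottleneck: its iterations form a chain, whereas the single matrix multiply can process every token at the same time on a GPU.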

Attention block and self-attention mechanism

Sure, here's the HTML structure with the translated text in simplified Chinese: ```html

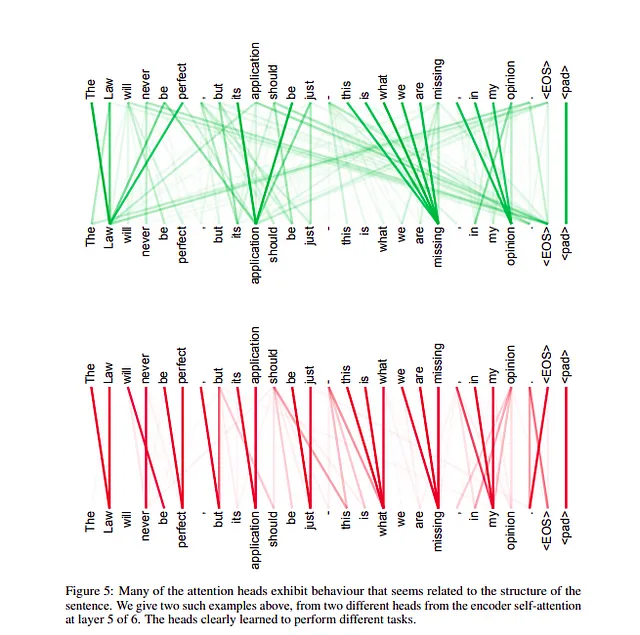

Transformer 类型模型的核心组成部分是注意力块(attention block),它基于注意力机制工作。在深入讨论变形金刚模型的架构之前,让我们先来看看注意力机制。

``` In simplified Chinese, the translation is: "Transformer 类型模型的核心组成部分是注意力块(attention block),它基于注意力机制工作。在深入讨论变形金刚模型的架构之前,让我们先来看看注意力机制。"Certainly! Here's how you could translate "The Attention Mechanism" into simplified Chinese, while keeping the HTML structure intact: ```html 注意力机制 ``` In this HTML snippet: - `` is used to define a section in a document. - `lang="zh-CN"` specifies the language of the text as simplified Chinese. - "注意力机制" is the translation of "The Attention Mechanism" into simplified Chinese characters.

We can think of attention as a fuzzy lookup in a key-value store.

- In a lookup table, we have entries that map keys to values; a query matches one key and returns its corresponding value.

- In attention, the query softly matches all keys, assigning each a weight between 0 and 1. The values of the keys are then multiplied by these weights and summed.
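
The contrast between the two lookups can be sketched as follows. The keys, values, and query are made-up numbers, and the dot product followed by a softmax is one common way to produce weights between 0 and 1 that sum to 1.

```python
import numpy as np


def softmax(x):
    """Map raw scores to positive weights that sum to 1."""
    shifted = x - np.max(x)        # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


# Hard lookup: the query must equal exactly one key.
table = {"a": 1.0, "b": 2.0, "c": 3.0}
hard_result = table["b"]

# Soft lookup: the query is compared with every key.
keys = np.array([[1.0, 0.0],
                 [0.0, 1.0],
                 [1.0, 1.0]])
values = np.array([[10.0, 0.0],
                   [0.0, 10.0],
                   [5.0, 5.0]])
query = np.array([1.0, 0.0])

scores = keys @ query              # one similarity score per key
weights = softmax(scores)          # each weight lies between 0 and 1
soft_result = weights @ values     # weighted sum of all values

print("weights:", weights)
print("soft result:", soft_result)
```

Keys that point in a similar direction to the query receive larger weights, so their values dominate the result, but every value contributes a little.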

Self Attention

Certainly! Here's the HTML structure with the translated text in simplified Chinese: ```html

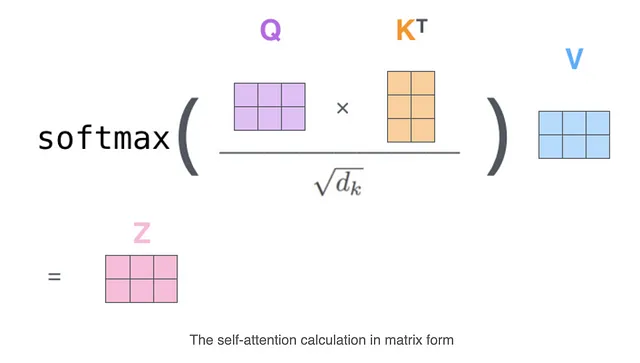

对于一个句子,让句子中所有n个单词的嵌入都为X。那么X将是一个形状为n行d列的矩阵。这里的d是嵌入的维度。

``` In simplified Chinese: "对于一个句子,让句子中所有n个单词的嵌入都为X。那么X将是一个形状为n行d列的矩阵。这里的d是嵌入的维度。" This HTML `` tag contains the translated text.

The query, key, and value matrices are then computed as: q = XQ, k = XK, v = XV, where Q, K, and V are learned weight matrices.

Here q is the query matrix, k is the key matrix, and v is the value matrix. The final output is: output = softmax(q k^T) v, with the softmax applied to each row.

Intuition behind self attention

The attention mechanism lets us aggregate data from many (key, value) pairs. The output embedding of any token in the input text is therefore a weighted sum over all tokens in that input. We form the attention weights from the query and key matrices, and the softmax ensures that the weights for each token sum to 1. The weights are then multiplied with the value matrix to form the weighted representation of that token.
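
A minimal NumPy sketch of single-head self-attention follows. It assumes X has one row per token, so the projections are written `X @ Q`, `X @ K`, and `X @ V`. The weight matrices are random stand-ins for learned parameters, and their d-by-d shape is an assumption. The original paper additionally divides the scores by the square root of the key dimension; that scaling is omitted here to match the formula in the text.

```python
import numpy as np


def softmax_rows(scores):
    """Apply softmax to each row so every row of weights sums to 1."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def self_attention(X, Q, K, V):
    """Unscaled single-head self-attention: softmax(q k^T) v."""
    q, k, v = X @ Q, X @ K, X @ V      # each has shape (n, d)
    weights = softmax_rows(q @ k.T)    # (n, n): row i holds token i's weights
    return weights @ v, weights        # each output row is a weighted sum of v


rng = np.random.default_rng(0)
n, d = 5, 8                            # 5 tokens, embedding size 8 (arbitrary)
X = rng.normal(size=(n, d))            # stand-in token embeddings
Q, K, V = (rng.normal(size=(d, d)) for _ in range(3))

output, weights = self_attention(X, Q, K, V)
print(output.shape)                    # (5, 8)
print(weights.sum(axis=1))             # every row sums to 1
```

Row i of `weights` tells us how much token i draws from every token in the sentence, including itself.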

Problems with using just the self attention

- No inherent notion of order: Because attention carries no inherent order information, we need to encode order in the token embeddings. Solution: add a position embedding to each token embedding to encode the token's position. The updated embedding is Xi_new = Xi + Pi, where Xi_new is the updated embedding of token i, Xi is its original embedding, and Pi is the position embedding for position i.

- No non-linearities for deep learning: Stacking more self-attention layers just re-averages the value vectors. Solution: add an MLP (dense layers with a non-linear activation function) to process each output vector.

- Ensuring we do not peek at the future when predicting a sequence. Solution: masked self-attention (we will cover this in a later chapter of the series).
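
The first problem and its fix can be checked numerically. The sketch below shows that plain self-attention is blind to order (shuffling the tokens only shuffles the outputs) and that adding position embeddings, Xi_new = Xi + Pi, removes this blindness. All matrices are random stand-ins for learned parameters, and the permutation is arbitrary.

```python
import numpy as np


def softmax_rows(scores):
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def self_attention(X, Q, K, V):
    """Unscaled single-head self-attention, one row of X per token."""
    q, k, v = X @ Q, X @ K, X @ V
    return softmax_rows(q @ k.T) @ v


rng = np.random.default_rng(0)
n, d = 4, 6
X = rng.normal(size=(n, d))            # token embeddings
P = rng.normal(size=(n, d))            # stand-in for learned position embeddings
Q, K, V = (rng.normal(size=(d, d)) for _ in range(3))
perm = np.array([2, 0, 3, 1])          # an arbitrary reordering of the tokens

# Without positions: reordering the input only reorders the output rows.
out = self_attention(X, Q, K, V)
out_shuffled = self_attention(X[perm], Q, K, V)
order_blind = np.allclose(out[perm], out_shuffled)

# With positions: P[i] stays attached to slot i, so the same token placed in
# a different slot gets a different input vector and a different output.
X_new = X + P
out_pos = self_attention(X_new, Q, K, V)
out_pos_shuffled = self_attention(X[perm] + P, Q, K, V)
order_aware = not np.allclose(out_pos[perm], out_pos_shuffled)

print("order-blind without positions:", order_blind)
print("order-aware with positions:", order_aware)
```

The other two fixes, the MLP and masking, change what happens after or inside the attention step rather than the input embeddings.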

The Transformer Model

Components of the transformer model

- Multi-head self-attention: Uses several attention heads in parallel.

- The positional encoding layer: Encodes the positional information of the tokens along with the embedding of each token.

- MLP layer after the self-attention block (feed-forward layer): Introduces non-linearity into the model.

- Residual connections: A trick that helps the model train better. Residual (skip) connections help the model learn by preserving earlier information and letting gradients propagate more easily.

- Layer normalization: Helps the model train faster. The idea is to cut down uninformative variation in the hidden layers by normalizing them to zero mean and unit standard deviation.
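
The components listed above can be wired together in a compact NumPy sketch of one Transformer block. Several choices here are assumptions rather than anything the text specifies: layer normalization is applied after each residual addition (the ordering used in the original paper), the MLP uses a ReLU activation, attention scores are divided by the square root of the head size, the learned gain and bias of layer normalization are left out, and all weights are random. Position embeddings are assumed to have been added to X already.

```python
import numpy as np


def softmax_rows(scores):
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def layer_norm(x, eps=1e-5):
    """Normalize each token vector to zero mean and unit standard deviation."""
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)


def multi_head_self_attention(X, Wq, Wk, Wv, Wo, num_heads):
    n, d = X.shape
    d_head = d // num_heads

    def split(M):
        # (n, d) -> (num_heads, n, d_head): each head gets a slice of features
        return M.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(X @ Wq), split(X @ Wk), split(X @ Wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)   # (heads, n, n)
    heads = softmax_rows(scores) @ v                       # (heads, n, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d)        # back to (n, d)
    return concat @ Wo


def mlp(x, W1, b1, W2, b2):
    """Feed-forward layer: the ReLU is what introduces non-linearity."""
    return np.maximum(0.0, x @ W1 + b1) @ W2 + b2


def transformer_block(X, p, num_heads):
    attn = multi_head_self_attention(X, p["Wq"], p["Wk"], p["Wv"], p["Wo"], num_heads)
    X = layer_norm(X + attn)                               # residual + layer norm
    ff = mlp(X, p["W1"], p["b1"], p["W2"], p["b2"])
    return layer_norm(X + ff)                              # residual + layer norm


rng = np.random.default_rng(0)
n, d, hidden, num_heads = 5, 8, 32, 2
params = {
    "Wq": rng.normal(size=(d, d)), "Wk": rng.normal(size=(d, d)),
    "Wv": rng.normal(size=(d, d)), "Wo": rng.normal(size=(d, d)),
    "W1": rng.normal(size=(d, hidden)), "b1": np.zeros(hidden),
    "W2": rng.normal(size=(hidden, d)), "b2": np.zeros(d),
}
X = rng.normal(size=(n, d))            # embeddings with positions already added
out = transformer_block(X, params, num_heads)
print(out.shape)                       # (5, 8)
```

Because the output has the same shape as the input, blocks like this one can be stacked one after another.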

Resources for further reading

- The Illustrated Transformer: http://jalammar.github.io/illustrated-transformer/

- Transformer: A Novel Neural Network Architecture for Language Understanding

- Attention Is All You Need (paper)