Certainly! Here's the translation of "Paper Review: Are LLMs Good at Search?" in simplified Chinese while keeping the HTML structure intact: ```html

论文评审:LLM在搜索中表现如何?

```

Sure, here's the HTML structure with the translated text in simplified Chinese: ```html

这是源自我们内部实验室阅读小组的一系列论文评论文章中的第一篇。在本期中,我们将讨论由作者孙伟伟等撰写的论文:“ChatGPT在搜索方面表现如何?调查大型语言模型作为重新排序代理” ,该论文荣获EMNLP 2023杰出论文奖。[链接到Arxiv][链接到代码]

``` The translated text in simplified Chinese: "这是源自我们内部实验室阅读小组的一系列论文评论文章中的第一篇。在本期中,我们将讨论由作者孙伟伟等撰写的论文:“ChatGPT在搜索方面表现如何?调查大型语言模型作为重新排序代理”,该论文荣获EMNLP 2023杰出论文奖。[链接到Arxiv][链接到代码]" This maintains the HTML structure while providing the translated text in simplified Chinese.Certainly! Here's the translated text in simplified Chinese while maintaining the HTML structure: ```html

tldr: 使用一种称为排列生成的方法,作者们展示了语言模型在重新排列搜索结果方面的出色表现,在零样本设置下甚至超过了其他精调模型。为了克服语言模型推理和令牌成本的问题,他们提出了一种称为排列蒸馏的知识蒸馏方法,用于训练更小、更高效的模型,在仅使用训练数据的子集进行训练后,这些模型表现与现有的精调模型相当。

``` This HTML structure ensures that the translated text is presented clearly in a web-compatible format.To translate "Background and Central Questions" into Simplified Chinese while keeping the HTML structure, you can use the following code:

```html

背景与核心问题

```

Here’s how you can structure it in an HTML document:

```html

Translation

背景与核心问题

```

In this HTML example:

- `背景` translates to **Background**.

- `与` means **and**.

- `核心问题` translates to **Central Questions**.

Feel free to adjust the HTML tags as needed for your specific use case!

Certainly! Here's the HTML structure with the translated text in simplified Chinese: ```html

信息检索(IR)中最成功的方法通常依赖于两阶段过程:

- 检索阶段:搜索引擎根据查询检索出一组候选段落。这可以是密集型(基于嵌入)或稀疏型(基于关键词),或者两者的某种组合。

- 重新排序阶段:重新排序模型对候选段落进行评分和重新排序,以向用户展示最相关的内容。

信息检索(IR)中最成功的方法通常依赖于两阶段过程:

- 检索阶段:搜索引擎根据查询检索出一组候选段落。这可以是密集型(基于嵌入)或稀疏型(基于关键词),或者两者的某种组合。

- 重新排序阶段:重新排序模型对候选段落进行评分和重新排序,以向用户展示最相关的内容。

Sure, here's the translated text in simplified Chinese, keeping the HTML structure: ```html

本文关注重新排序,传统上需要在大型人工标注数据集上对模型进行微调。这种方法的一个缺点是需要大量人力来标注数据,并且在标注数据有限的领域/区域中可能导致泛化能力较弱。因此,越来越多的人开始关注如何利用大语言模型(LLMs)的零-shot推理能力来进行重新排序任务。

``` This HTML snippet contains the translated text in simplified Chinese, structured similarly to the original English text.当然,这里是您要求的将英文文本翻译成简体中文的版本,同时保持 HTML 结构不变: ```html

LLMs 在检索增强生成(RAG)框架中被广泛使用,在内容生成步骤中用于理解检索到的段落并生成连贯的回复/总结。然而,它们在“检索”部分的有效性,即作为重新排序代理的有效性,还没有被彻底研究,因为在这个背景下应用 LLMs 是具有挑战性的,主要是由于排名目标和 LLM 预训练目标之间存在重大差异。此外,LLMs 的推理和令牌成本在实时搜索场景中可能是非常高的。

``` ### 逐句翻译及解释 1. **English:** LLMs have been extensively used in the Retrieval Augmented Generation (RAG) framework, where they are used in the content generation step to make sense of the retrieved passages and generate coherent responses/summaries. - **Chinese:** LLMs 在检索增强生成(RAG)框架中被广泛使用,在内容生成步骤中用于理解检索到的段落并生成连贯的回复/总结。 2. **English:** However, their effectiveness in the “retrieval” portion, i.e. as re-ranking agents, has not been thoroughly investigated because it is challenging to apply LLMs in this context due to major differences between the ranking objective and LLM pre-training objectives. - **Chinese:** 然而,它们在“检索”部分的有效性,即作为重新排序代理的有效性,还没有被彻底研究,因为在这个背景下应用 LLMs 是具有挑战性的,主要是由于排名目标和 LLM 预训练目标之间存在重大差异。 3. **English:** Additionally, the inference and token cost of LLMs can be prohibitive in real-time search scenarios. - **Chinese:** 此外,LLMs 的推理和令牌成本在实时搜索场景中可能是非常高的。 如果有其他需求或修改意见,请告诉我!Sure, here's the translated text in simplified Chinese, while keeping the HTML structure: ```html

作者通过以下问题探讨这些挑战:

- LLM 在重新排序搜索结果方面与微调模型相比有多有效?

- 我们能否将 LLM 的知识提炼到更小的模型中,以实现高效推断?

作者通过以下问题探讨这些挑战:

- LLM 在重新排序搜索结果方面与微调模型相比有多有效?

- 我们能否将 LLM 的知识提炼到更小的模型中,以实现高效推断?

Sure, here is the translation of "Results at a glance" in simplified Chinese while keeping the HTML structure: ```html 一览结果 ``` In this translation: - `` is used to maintain the inline nature of the text. - "一览结果" translates to "Results at a glance" in simplified Chinese.

Sure, here's the translated text in simplified Chinese, while keeping the HTML structure intact:

```html

```html

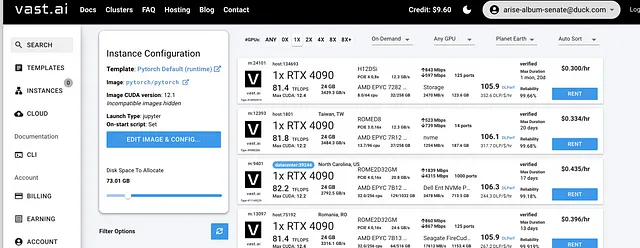

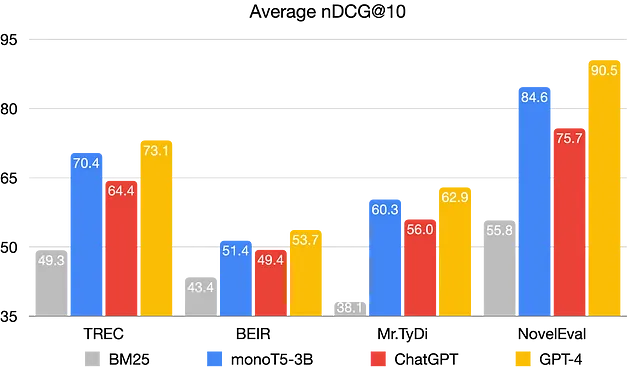

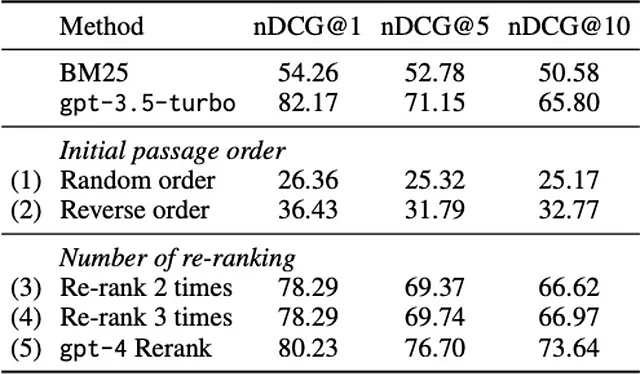

这些结果是使用排列生成方法(下文讨论)获得的,并显示GPT-4在所有数据集上优于monoT5-3B。ChatGPT,比GPT-4小的模型,在零-shot设置中表现良好,表明LLMs可以作为有效的重新排序代理。

```

```html

Figure 2 显示了经过知识蒸馏的学生模型的性能,与微调模型进行了比较。学生模型(绿线)在训练了部分训练数据后,展示出与甚至优于微调模型的性能,显示了所提出的知识蒸馏方法的有效性(下文讨论)。同一模型(DeBERTa-Large),直接在MS MARCO数据集上进行微调(灰线),其性能显著低于学生模型、置换蒸馏模型。有趣的是,增加参数数量比增加训练样本数量更有帮助,并且性能在几千个查询后就会饱和(尽管这仍然是大量的成对示例,如训练目标部分所讨论的)。

``` This HTML snippet preserves the structure while presenting the translated text in simplified Chinese.Sure, here's the translation of "Prompting an LLM for re-ranking" in simplified Chinese while keeping the HTML structure: ```html

触发LLM进行重新排名

``` This HTML snippet retains the structure while presenting the translated text.

Sure, here's the translated text in simplified Chinese while keeping the HTML structure intact:

```html

Certainly! Here's the translated text in simplified Chinese, keeping the HTML structure: ```html

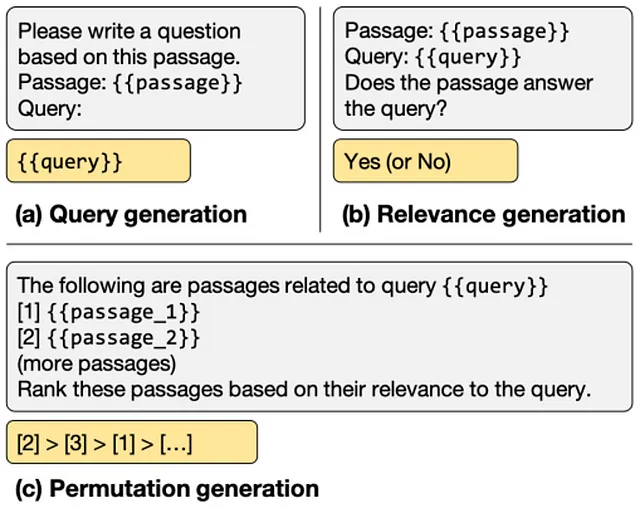

这些方法的缺点在于它们不直接适用于排名;特定的查询-段落对是否相关并不有助于将该段落与其他段落进行排名。为了获得排名,这些方法会依赖模型中的对数概率分数,以评估每个段落对查询的相关性,并使用模型的置信分数作为代理排名分数。不幸的是,由于许多语言模型被隐藏在 API 后面,无法直接访问这些分数。

``` This HTML structure will ensure that the text is presented clearly and correctly formatted in simplified Chinese.Sure, here is the translation in simplified Chinese while keeping the HTML structure intact: ```html

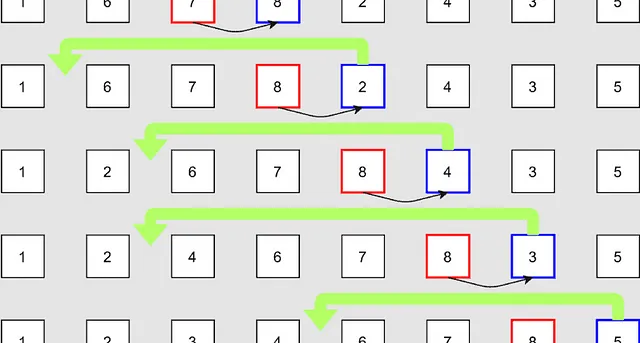

为了解决这些问题,本文引入了一种称为排列生成的新型提示方法,具体步骤如下:

- 使用BM25检索前k个候选段落

- 使用图3中c)部分的提示,在LLM中要求以窗口大小w对候选段落进行排名,其中w为窗口大小,从列表末尾开始

- 使用步长s从后向前滑动窗口,其中s等于w/2,以确保LLM在前一个窗口中排名靠前的段落的前一半被包括在下一个窗口中

- 重复此过程直到窗口达到列表的前端

Sure, here's the translated text in simplified Chinese while maintaining the HTML structure: ```html

这种方法的一个关键优点是,它允许LLM直接生成排名,无需依赖对数概率分数,而窗口策略则通过将排名任务分解为较小的子任务,有助于克服LLM的标记限制。

``` This HTML snippet translates to: "这种方法的一个关键优点是,它允许LLM直接生成排名,无需依赖对数概率分数,而窗口策略则通过将排名任务分解为较小的子任务,有助于克服LLM的标记限制。"```html

然而,这种方法的一个缺点是模型有可能在不同窗口中生成不一致的结果。图9显示了Davinci-003、GPT-3.5-turbo和GPT-4中这种情况发生的频率。另一个缺点是这种方法会增加API调用次数、令牌成本和推理时间。

```Sure, here's the translation of "Tuning — Sliding window hyperparameters" in simplified Chinese, while keeping the HTML structure intact: ```html 调参 — 滑动窗口超参数 ```

```html

作者们尝试了不同的窗口大小和步长来找到最佳配置。他们在TREC-DL19数据集上使用GPT-3.5-turbo-16k测试了这些超参数,并选择了窗口大小为20、步长为10以获得最高的nDCG@10分数。有趣的是,窗口大小为40、步长为20的配置在nDCG@5和nDCG@1上表现更好。

```Sure! Here’s the translation of “Knowledge distillation for efficient inference” into Simplified Chinese, while keeping the HTML structure: ```html 知识蒸馏以实现高效推理 ``` Feel free to use this translation in your HTML documents or any other context where you need it! If you need any more translations or have other questions, just let me know.

Sure, here is the translated text in simplified Chinese, keeping the HTML structure: ```html

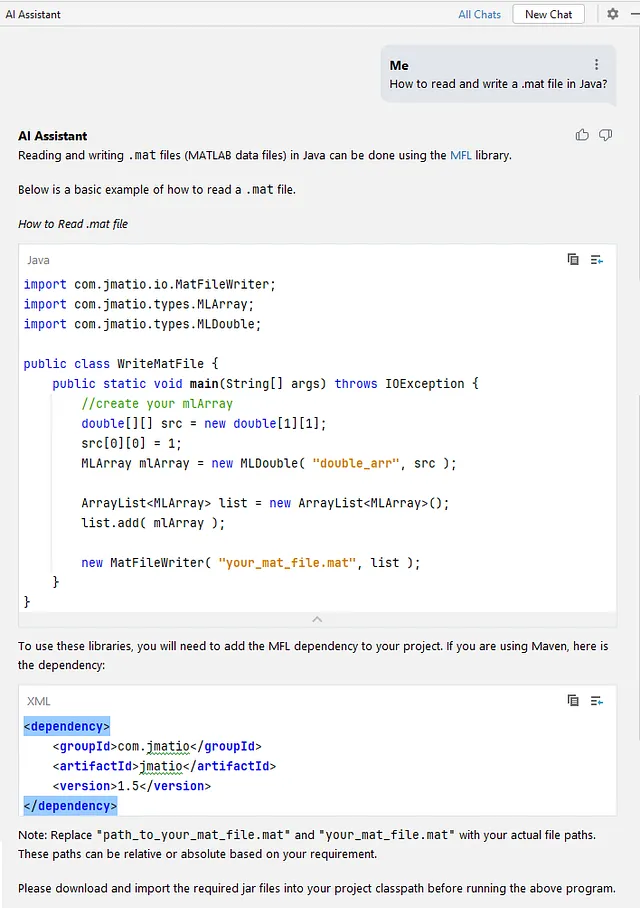

使用语言模型(LLMs)进行重新排序在推断时间和标记成本上昂贵,并且有点像用大锤砸核桃。为了解决这个问题,作者提出了一种称为排列蒸馏的知识蒸馏方法,用于训练更小、更高效的模型,这些模型可以执行与精调模型相当的重新排序任务。即使对于像ChatGPT这样不公开其内部对数概率分数的黑盒模型,该方法也是有效的。

``` This HTML snippet preserves the structure while presenting the translated text in simplified Chinese.Certainly! Here's the translation of "Approach at a glance:" in simplified Chinese, while maintaining HTML structure: ```html 一览方法: ```

- Sure, here's the text translated into simplified Chinese while maintaining the HTML structure: ```html Sample N (10k) queries from the MS MARCO dataset ``` Translated text in simplified Chinese: ```html 从MS MARCO数据集中抽取N(10k)个查询样本 ```

- Certainly! Here's the translated text in simplified Chinese, while keeping the HTML structure intact: ```html 检索每个查询的前 M (20) 个候选段落,使用 BM25 算法。 ``` In this HTML snippet, the translated text appears in Chinese characters while maintaining the HTML tags for structure.

- Sure, here's how you can write that in simplified Chinese while keeping the HTML structure: ```html 使用排列生成技术来生成使用ChatGPT(教师模型)的排名通道列表。 ``` In this HTML snippet, the text "使用排列生成技术来生成使用ChatGPT(教师模型)的排名通道列表。" is the translated version of "Use permutation generation to produce ranked list of passages using ChatGPT (teacher model)" in simplified Chinese.

- Sure, here's the translation in simplified Chinese while keeping the HTML structure: ```html Train the student model by reducing a pairwise loss function between the student and teacher outputs ``` Translated to simplified Chinese: ```html 通过减少学生和教师输出之间的成对损失函数来训练学生模型 ```

```html

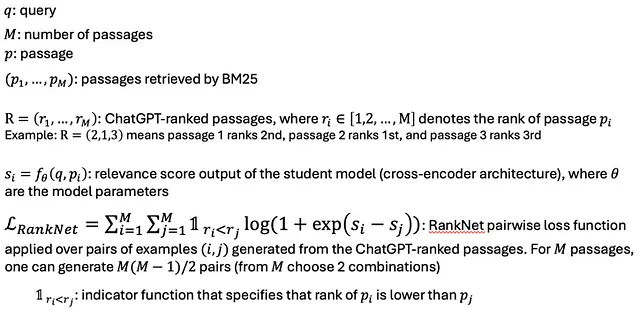

上述是排列蒸馏方法培训目标的概述。学生模型被训练来最小化RankNet损失,[7]即其输出与教师模型输出之间的成对损失,后者是候选段落的排名。成对示例是通过获取教师模型排名的所有段落的成对组合并过滤这些组合中第一个段落排名高于第二个段落来创建的。这导致每个查询总共有M(M-1)/2个训练示例,其中M是候选段落数量。基于这一点,尽管作者只从MS MARCO数据集中采样了10k个查询,他们最终为学生模型得到了190万个成对训练示例(对于M = 20)。

```Certainly! Here's how you can write "Additional Experiments, Ablations, and Details" in simplified Chinese within an HTML structure: ```html

额外实验、消融和细节

``` In this HTML snippet: - `` is used to denote a paragraph in HTML, which is semantically appropriate for a short piece of text like a heading or title. - "额外实验、消融和细节" translates to "Additional Experiments, Ablations, and Details" in simplified Chinese.

Certainly! Here is the translated text in simplified Chinese, keeping the HTML structure: ```html

本文包括了大量额外的实验和消融实验,以验证所提出的方法,并更好地理解其中的基本机制。在这里,我们将简要介绍一些有趣的实验。

``` This HTML snippet contains the translated text: ```html本文包括了大量额外的实验和消融实验,以验证所提出的方法,并更好地理解其中的基本机制。在这里,我们将简要介绍一些有趣的实验。

``` In this translation: - "本文" translates to "this paper". - "大量额外的实验和消融实验" translates to "a lot of additional experiments and ablations". - "验证所提出的方法" translates to "to validate the proposed methods". - "更好地理解其中的基本机制" translates to "to understand the underlying mechanisms better". - "在这里" translates to "here". - "我们将简要介绍一些有趣的实验" translates to "we'll touch on a few of the interesting ones".Sure, here's the translation of "Experiment — Fine-tuned vs. knowledge-distilled models: student surpasses teacher" in simplified Chinese while maintaining the HTML structure: ```html 实验 — 微调对抗知识蒸馏模型:学生超越老师 ```

```html

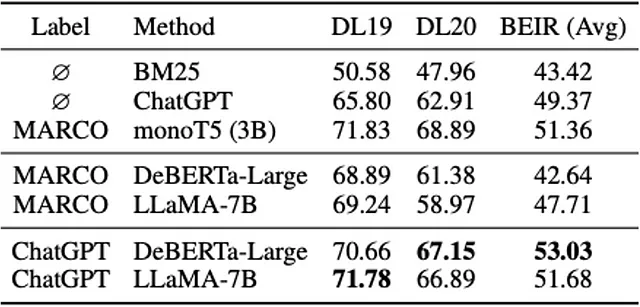

在图5中,作者展示了BM25、ChatGPT、经过微调的monoT5-3B、经过微调的DeBERTa-Large和经过微调的LLaMA-7B模型与使用ChatGPT排名训练的置换蒸馏学生模型的性能对比。结果显示,学生模型在DL19、DL20和平均BEIR基准上超过了教师模型(ChatGPT),并且在与经过微调的monoT5-3B模型持平的同时,超过了经过微调的DeBERTa-Large和LLaMA-7B模型。这表明置换蒸馏方法在训练更小、更强大和更高效的重排序任务模型方面的有效性。有趣的是,7B参数的LLaMA模型在显著优于4.35亿参数的DeBERTa-Large模型方面表现不明显。作者在其代码库中提供了蒸馏的学生模型和训练代码的下载链接:https://github.com/sunnweiwei/RankGPT。

```Sure, here's how you can write "Experiment — LLM comparison" in simplified Chinese while keeping the HTML structure intact: ```html 实验 — LLM比较 ``` This HTML snippet will display "实验 — LLM比较" on a webpage, where "实验" means "Experiment" and "LLM比较" means "LLM comparison" in simplified Chinese.

Certainly! Here's the HTML structure with the translated text in simplified Chinese:

```html

Sure, here's the translation of "Experiment — LLM prompting comparison (OpenAI models)" into simplified Chinese, keeping the HTML structure: ```html 实验 — LLM 提示比较(OpenAI 模型) ``` In this translation: - "实验" means "Experiment". - "LLM" is kept as is for abbreviation. - "提示比较" means "prompting comparison". - "(OpenAI 模型)" means "(OpenAI models)".

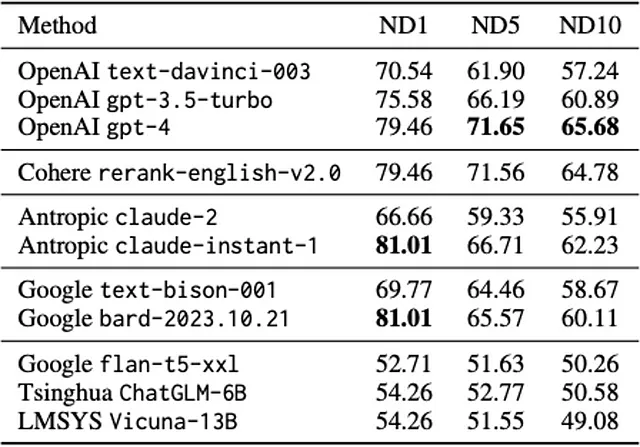

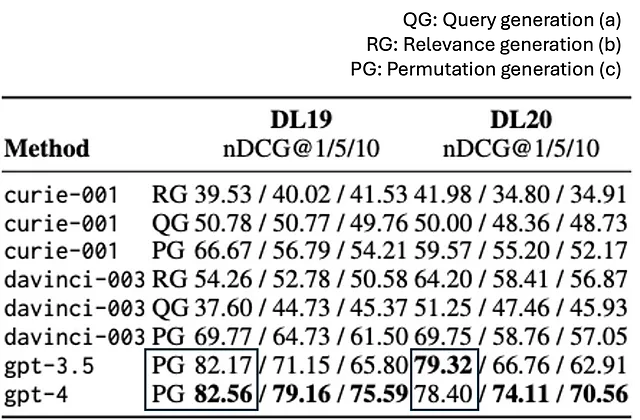

```html 在图7中,作者比较了各种 OpenAI LLM 端点(GPT-3.5、GPT-4、Davinci-003 和 Curie-001),使用了之前介绍的不同指令策略(即查询生成、相关性生成和排列生成)。结果显示,排列生成(PG)方法在所有 LLM 上均持续优于其他两种方法,并且对 nDCG@1 有显著提升。作者推测,“LLM 通过阅读多个可能互补信息的段落,对查询和段落有了更全面的理解,从而提高了模型的排序能力”,作为解释 PG 性能的理由。 ```

Certainly! Here is the translated text in simplified Chinese: ```html

从这些结果中另一个有趣的观察是,尽管在nDCG@5和nDCG@10上GPT-4表现更好(见图5中的方框),但在nDCG@1上,GPT-3.5和GPT-4的表现是可比较的。此外,尽管Davinci-003和GPT-3.5大小相似,但Davinci-003的表现要差得多,作者认为这是因为Davinci-003更容易在重新排名过程中产生不一致的结果,即错过段落。

``` This HTML structure preserves the text content while allowing it to be displayed on a web page with proper formatting.Sure, here's the translated text in simplified Chinese while maintaining the HTML structure: ```html

``` Translated text: ```htmlAblation — Sensitivity to initial passage order

```消融 — 对初始通道顺序的敏感性

```html

在图8中,作者们研究了排列生成方法对初始通行顺序的敏感性。他们发现,模型的性能对由BM25生成的初始通行顺序非常敏感。当初始通行顺序被随机化时,这种方法的性能显著下降,表明初始通行顺序的质量对排列生成方法的成功至关重要。此外,随机顺序(1)实际上导致的性能比逆序(2)更差,这一点很有趣,可能需要进一步研究。在逆序(2)情况下,模型必须将最相关的通行从顺序的末尾向前携带,而在滑动窗口之间不丢弃它们,这在概念上使任务变得更加困难。然而,目前尚不清楚为什么随机顺序(1)的表现比逆序(2)更差。

``` This HTML structure includes the translated text in simplified Chinese.Sure, here is the translation in simplified Chinese while maintaining HTML structure: ```html LLM 错误分析 ```

Certainly! Here's the HTML structure with the translated text in simplified Chinese: ```html

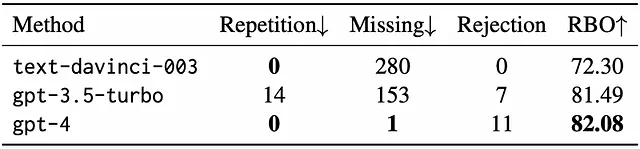

在图9中,作者们量化了LLM输出的不一致或错误频率,分为以下几类:

- 重复:排名中出现相同的段落标识符

- 缺失:排名中缺少一个或多个段落

- 拒绝:模型拒绝了提示并拒绝排名段落

- RBO:基于排名的重叠 — 一种衡量两个排名相似性的方法。模型在不同窗口之间的排名一致性如何?

To translate the given text into simplified Chinese while keeping the HTML structure intact, you can use the following: ```html

结果显示,对于Davinci-003和GPT-3.5-turbo来说,最常见的错误是缺失的段落;而对于GPT-4来说,最常见的错误是拒绝请求。总体而言,GPT-4的RBO略高于GPT-3.5-turbo,使其成为三者中最一致且最少出错的模型。然而,由于成本考虑,作者们使用了ChatGPT的相关性判断来生成他们的训练数据。

``` This HTML snippet will display the translated text in simplified Chinese, maintaining the original structure of the content.Sure, here's how you can write "Thoughts and Takeaways" in simplified Chinese within an HTML structure: ```html 思考与收获 ``` This HTML snippet retains the structure while displaying the translated text in simplified Chinese characters.

Certainly! Here is the HTML structure with the translated text in simplified Chinese: ```html

在TR Labs,我和其他应用科学家同事们很喜欢阅读这篇论文,并就其优点进行了热烈讨论,探讨如何将其中的一些方法应用到我们自己的工作中。以下是我们讨论的一些关键观点和想法:

``` Translated text in simplified Chinese: "在TR Labs,我和其他应用科学家同事们很喜欢阅读这篇论文,并就其优点进行了热烈讨论,探讨如何将其中的一些方法应用到我们自己的工作中。以下是我们讨论的一些关键观点和想法:"- Sure, here's the translated text in simplified Chinese while keeping the HTML structure: ```html 在科学环境中,我们面对着与黑匣子LLM合作的挑战,本文提供了一些令人信服的证据(以及有效的策略),用于提炼这些黑匣子LLM成更小、更实用的模型,可用于实时搜索场景,无需模型评分。 ``` This HTML structure surrounds the translated Chinese text, maintaining the integrity of the original format.

Sure, here's the translated text in simplified Chinese while keeping the HTML structure: ```html 在实验室,我们正在探索置换提炼技术,以改进段落重新排序功能,并且我们正在将置换生成作为使用LLM生成训练数据以供重新排序模型的新方法加入到我们的工具包中。 ``` This text maintains the structure for easy integration into HTML while presenting the translation accurately.

- ```html

除了在没有模型分数的情况下进行工作的挑战之外,对LLMs进行研究的另一个问题是它们的训练数据缺乏透明度。作者们引入了一个新的基准数据集,NovelEval,部分原因是担心LLMs的训练数据可能存在潜在的数据泄漏问题。在评估LLMs的输出时,需要特别注意这一点。

```

Sure, here is the translation of the text into simplified Chinese, keeping the HTML structure intact: ```html

在实验室,我们不断设计和评估新的评分任务,以估计我们检索系统的质量,并依赖我们的学科专家为这些任务提供高质量的注释。

``` This HTML snippet contains the translated text: ```html在实验室,我们不断设计和评估新的评分任务,以估计我们检索系统的质量,并依赖我们的学科专家为这些任务提供高质量的注释。

```- Certainly! Here's the translated text in simplified Chinese, keeping the HTML structure intact:

```html

虽然这篇论文展示了令人印象深刻的结果并且相当详细,但是学生模型似乎还有进一步优化的空间。例如,可以在除了从MS MARCO抽样查询的数据集上,用LLM-ranks来训练学生模型,或者探索数据增强方法以提升学生模型的鲁棒性。

``` This HTML snippet contains the translated text in simplified Chinese, formatted as a paragraph.

Sure, here's the translated text in simplified Chinese while keeping the HTML structure intact: ```html 在实验室中,我们正在尝试其他更具领域特定的查询,并探索额外的超参数,例如通过排名对训练样本进行阈值处理。 ``` This translation maintains the content's meaning and structure as requested.

- ```html

最后,而且最重要的是,虽然作者选择在一个众所周知的数据集(MS MARCO)上训练学生模型作为比较的基础,但这些结果表明,使用语言模型(LLMs)作为任何查询集合的教师模型可能是有效的,从而打开了在特定用例、非英语语言甚至专业领域中提升信息检索性能的可能性。

``` This HTML snippet contains the translated text in simplified Chinese while maintaining the HTML structure.

Sure, here is the translated text in simplified Chinese while keeping the HTML structure: ```html

在实验室,我们很高兴将这些技术纳入我们自己的研发工作中,并且期待看到它们如何可能改善我们现有的系统。

``` Translated text: 在实验室,我们很高兴将这些技术纳入我们自己的研发工作中,并且期待看到它们如何可能改善我们现有的系统。Certainly! Here is the translation of the text into simplified Chinese, while keeping the HTML structure: ```html

💬 您对这篇文章有什么想法?请在评论中告诉我们!

``` In this HTML snippet: - `` is used to enclose the translated text, representing a paragraph in HTML. - The text "💬 您对这篇文章有什么想法?请在评论中告诉我们!" is the translation of "💬 What were your thoughts about this paper? Let us know in the comments!"

Sure, here's the translation of the citation into simplified Chinese, keeping the HTML structure intact: ```html [1] Sun, W., et al. (2022). Is ChatGPT Good at Search? Investigating Large Language Models as Re-Ranking Agents. ArXiv, abs/2304.09542. ``` Translated into simplified Chinese: ```html [1] 孙W.等人(2022)。ChatGPT在搜索中表现如何?探究大语言模型作为重新排序代理的效果。ArXiv,abs/2304.09542。 ``` This maintains the citation format while translating the title and relevant details into Chinese.

Certainly! Here's how you can structure the HTML to display the translated citation in simplified Chinese: ```html

[2] Craswell, N., et al. (2020). TREC 2020深度学习赛道概述. ArXiv, abs/2102.07662.

``` In this HTML snippet: - `TREC 2020深度学习赛道概述` encapsulates the translated title in simplified Chinese. - Ensure the text "TREC 2020深度学习赛道概述" accurately represents the translated title provided. This approach maintains the structure and integrity of the HTML while including the translation in the appropriate language markup (`lang="zh-Hans"` for simplified Chinese).Certainly! Here's the text translated to simplified Chinese while keeping the HTML structure intact: ```html [3] Thakur, N., et al. (2021). BEIR: A Heterogenous Benchmark for Zero-shot Evaluation of Information Retrieval Models. NeurIPS 2021. ``` In simplified Chinese, it would be: ```html [3] Thakur, N., 等人 (2021). BEIR: 一个用于零样本评估信息检索模型的异构基准. NeurIPS 2021. ``` The translated text has been placed within a `` tag to maintain the original HTML structure.

Sure, here's the text translated into simplified Chinese, maintaining the HTML structure: ```html [4] 张晓等人 (2021)。Mr. TyDi: 一种用于稠密检索的多语言基准。MRL 2021。 ``` In this translation: - "张晓等人" corresponds to "Zhang, X., et al." - "Mr. TyDi: 一种用于稠密检索的多语言基准" corresponds to "Mr. TyDi: A Multilingual Benchmark for Dense Retrieval" - "MRL 2021" remains the same for the conference name and year.

Sure, here is the text translated to simplified Chinese, while keeping the HTML structure intact: ```html

[5] Sachan, D. S., et al. (2022). 提升段落检索与零-shot 问题生成. EMNLP 2022.

```Sure, here's the translation of the citation into simplified Chinese within an HTML structure: ```html [6] Liang, P., 等. (2022). 语言模型的整体评估. ArXiv, abs/2211.09110. ``` In this translation: - "等." is used to abbreviate "et al." in Chinese, meaning "and others" or "et al." - "语言模型的整体评估" translates to "Holistic evaluation of language models." - The rest remains in English as it is commonly recognized and used in citations. This format preserves the original structure while incorporating the Chinese translation seamlessly.

Sure, here's the translation of the citation into simplified Chinese, keeping the HTML structure intact: ```html [7] Burges, C. J. C., et al. (2005). 学习排序使用梯度下降。ICML 2005. ``` This HTML structure ensures that the citation format is maintained while displaying the translated text in simplified Chinese.

Certainly! Here's the text "Further Reading/References on Knowledge Distillation of LLMs" translated into simplified Chinese, while keeping the HTML structure: ```html LLMs知识蒸馏的进一步阅读/参考资料 ``` This HTML snippet maintains the structure and incorporates the translated text into simplified Chinese.

Sure, here's how you could represent "Distilling reasoning ability" in simplified Chinese while keeping HTML structure: ```html 提炼推理能力 ``` In this HTML snippet: - `` is used to define a section in a document. - `提炼推理能力` is the simplified Chinese translation of "Distilling reasoning ability".

Sure, here's the translated text in simplified Chinese while keeping the HTML structure intact: ```html [8] Fu, Y., 等人. (2023). 将较小的语言模型专门用于多步推理. ICML 2023. ``` This maintains the citation format and translates the title of the paper and the conference name into simplified Chinese.

Sure, here's the translation of the citation in simplified Chinese within an HTML structure: ```html

[9] Magister, L. C., et al. (2023). 教授小型语言模型进行推理. ACL 2023.

```Certainly! Here is the translation of "Self-instruct" into simplified Chinese while maintaining the HTML structure: ```html 自我教导 ```

Certainly! Here's the translated text in simplified Chinese, keeping the HTML structure: ```html [10] 王宇等人 (2023b)。Self-instruct: Aligning language model with self generated instructions. ACL 2023。 ``` In this HTML snippet: - `` is used to encapsulate the citation information. - `[10]` remains in English as it is typically used universally for citations. - 王宇 (Wang, Y.) is the translated name in Chinese. - "等人" (et al.) signifies "et al." in Chinese, indicating multiple authors. - "Self-instruct: Aligning language model with self generated instructions." is translated into Chinese. - "ACL 2023." remains in English as it is an abbreviation that is commonly used in citations. This structure ensures the citation is properly formatted while the translated text appears correctly in simplified Chinese.

Certainly! Here's the translation of the text into simplified Chinese, keeping the HTML structure intact: ```html

[11] Taori, R., et al. (2023). Stanford alpaca: 一个指令跟随的羊驼模型. https://github.com/tatsu-lab/stanford_alpaca

``` In simplified Chinese: ```html[11] Taori, R., 等人. (2023). Stanford alpaca: 一个指令跟随的羊驼模型. https://github.com/tatsu-lab/stanford_alpaca

``` This translation maintains the citation and the hyperlink in the original text while providing the Chinese translation for "Stanford alpaca: An instruction-following llama model."Here's the translation of the text while keeping the HTML structure intact: ```html 使用大型语言模型生成来改进检索系统 ```

Certainly! Here's the translation in simplified Chinese while keeping the HTML structure intact: ```html [12] Sachan, D. S., 等人. (2023). 问题是训练密集段落检索器所需的一切. TACL 2023. ``` This HTML snippet retains the citation format and translates the title of the paper and the names accordingly.

Sure, here's how you can present that reference in HTML with the citation translated into simplified Chinese: ```html

[13] Shi, W., et al. (2023). Replug: Retrieval-augmented black-box language models. ArXiv, abs/2301.12652.

``` Translated into simplified Chinese: ```html[13] 史炜等人(2023年)。Replug:检索增强的黑盒语言模型。ArXiv,abs/2301.12652。

``` This maintains the HTML structure while providing the citation in simplified Chinese.