Best LLM Inference Engine? TensorRT vs vLLM vs LMDeploy vs MLC-LLM

Benchmarking various LLM Inference Engines

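LLMs excel at text generation applications such as chat and code completion, and they show a high degree of understanding and fluency. However, their large size also makes inference challenging. Basic inference is slow because LLMs generate text token by token, which requires a repeated call for each next token. As the input sequence grows, so does the processing time. In addition, LLMs have billions of parameters, which makes it difficult to store and manage all of their weights in memory.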
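The token-by-token cost can be made concrete with a toy sketch. `toy_next_token` and `generate` are hypothetical names and no real model is involved: the sketch only counts how many forward calls are made and how many token positions a real transformer would have to run through its layers, with and without caching the earlier keys and values (the KV cache).

```python
def toy_next_token(context):
    """Hypothetical stand-in for one model forward pass: returns a fake next-token id."""
    return (sum(context) + len(context)) % 50


def generate(prompt_ids, max_new_tokens, use_kv_cache):
    """Greedy token-by-token decoding.

    Returns the full id sequence, the number of forward calls, and the number of
    token positions a real transformer would have to process.
    """
    ids = list(prompt_ids)
    calls = 0
    positions = 0
    for step in range(max_new_tokens):
        if use_kv_cache and step > 0:
            positions += 1          # only the newest token; earlier keys/values are cached
        else:
            positions += len(ids)   # the whole (growing) sequence is processed again
        calls += 1
        ids.append(toy_next_token(ids))
    return ids, calls, positions


prompt = [3, 14, 15, 92]
print(generate(prompt, 3, use_kv_cache=False)[1:])  # (3, 15): 4 + 5 + 6 positions
print(generate(prompt, 3, use_kv_cache=True)[1:])   # (3, 6): 4 + 1 + 1 positions
```

Either way there is one call per generated token; without a cache the work per call also grows with the sequence length.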

Sure, here is the HTML structure with the translated text in simplified Chinese: ```html

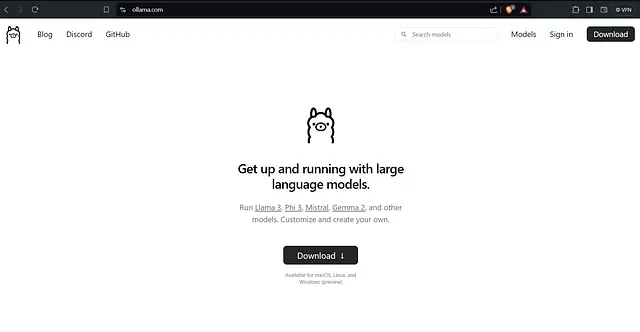

在优化LLM推理和服务的努力中,有多个框架和包。在本博客中,我将使用并比较以下推理引擎:

``` Translated text in simplified Chinese: "在优化LLM推理和服务的努力中,有多个框架和包。在本博客中,我将使用并比较以下推理引擎"- To translate "TensorRT-LLM" to simplified Chinese while keeping HTML structure intact, you would write: ```html TensorRT-LLM ``` This ensures the text "TensorRT-LLM" is marked as simplified Chinese within an HTML context.

- To translate "vLLM" to simplified Chinese while keeping the HTML structure intact, you would use the following: ```html vLLM ``` This HTML snippet ensures that "vLLM" is displayed as simplified Chinese text on a webpage, assuming the browser supports displaying Chinese characters.

- To translate "LMDeploy" into simplified Chinese while keeping the HTML structure, you would write: ```html LM部署 ``` In this translation: - "LM" stands for whatever "L" and "M" represent in your context (could be initials or an abbreviation). - "部署" means "Deploy" in Chinese. So, "LM部署" directly translates "LMDeploy" into simplified Chinese, maintaining the HTML structure as requested.

- To translate "MLC-LLM" into simplified Chinese while keeping the HTML structure, you would use the following: ```html MLC-LLM ``` This ensures that the text "MLC-LLM" is marked as simplified Chinese language content within an HTML document.

1. TensorRT-LLM

Introduction

TensorRT-LLM is an inference engine that accelerates and optimizes the inference performance of recent LLMs on NVIDIA GPUs. LLMs are compiled into TensorRT engines and then deployed with the Triton server to take advantage of inference optimizations such as in-flight batching (which reduces waiting time and allows higher GPU utilization), paged KV caching, multi-GPU multi-node inference, and FP8 support.

Usage

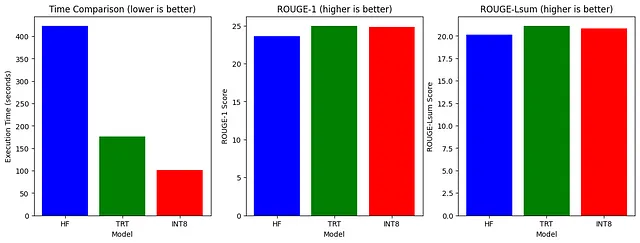

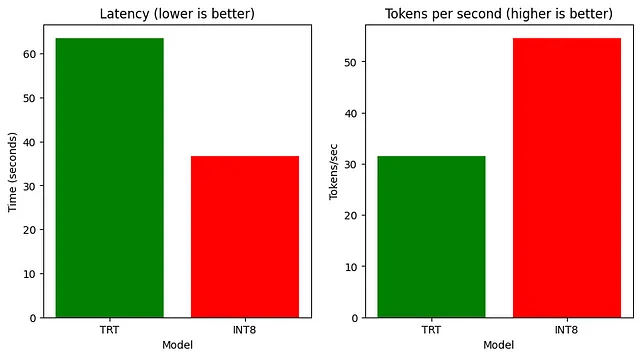

We will compare the HF model, the TensorRT model, and the TensorRT-INT8 (quantized) model in terms of execution time, ROUGE score, latency, and throughput.
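ROUGE scores measure n-gram overlap between a generated summary and a reference summary. For intuition only, here is a simplified ROUGE-1 F1 (unigram overlap on lowercase whitespace tokens, no stemming). The function name is hypothetical, and official ROUGE implementations apply their own tokenization and optional stemming, so their numbers can differ.

```python
from collections import Counter


def rouge1_f1(reference, candidate):
    """Simplified ROUGE-1 F1: clipped unigram overlap between two texts."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum((ref & cand).values())  # each word counts at most min(ref, cand) times
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


print(round(rouge1_f1("the cat sat on the mat", "the cat on the mat"), 3))  # 0.909
```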

You need to install the NVIDIA Container Toolkit for your Linux system, initialize Git LFS (for downloading HF models), and download the necessary packages as follows:

```
!curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
&& curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list
!apt-get update && apt-get install -y nvidia-container-toolkit
!git clone https://github.com/NVIDIA/TensorRT-LLM/
!apt-get update && apt-get -y install python3.10 python3-pip openmpi-bin libopenmpi-dev
!pip3 install tensorrt_llm -U --pre --extra-index-url https://pypi.nvidia.com
!pip install -r TensorRT-LLM/examples/phi/requirements.txt
!pip install flash_attn pytest
!curl -s https://packagecloud.io/install/repositories/github/git-lfs/script.deb.sh | bash
!apt-get install -y git-lfs
!git lfs install
```

Now fetch the model weights:

```
PHI_PATH = "TensorRT-LLM/examples/phi"
!rm -rf $PHI_PATH/7B
!mkdir -p $PHI_PATH/7B && git clone https://huggingface.co/microsoft/Phi-3-small-128k-instruct $PHI_PATH/7B
```

Convert the model into the TensorRT-LLM checkpoint format, then build a TensorRT-LLM engine from the checkpoint:

```
!python3 $PHI_PATH/convert_checkpoint.py --model_dir $PHI_PATH/7B/ \
--dtype bfloat16 \
--output_dir $PHI_PATH/7B/trt_ckpt/bf16/1-gpu/

# Build TensorRT-LLM model from checkpoint
!trtllm-build --checkpoint_dir $PHI_PATH/7B/trt_ckpt/bf16/1-gpu/ \
--gemm_plugin bfloat16 \
--output_dir $PHI_PATH/7B/trt_engines/bf16/1-gpu/
```

Similarly, now apply INT8 weight-only quantization to the HF model and convert the checkpoint to the TensorRT-LLM format:

```
!python3 $PHI_PATH/convert_checkpoint.py --model_dir $PHI_PATH/7B \
--dtype bfloat16 \
--use_weight_only \
--output_dir $PHI_PATH/7B/trt_ckpt/int8_weight_only/1-gpu/

!trtllm-build --checkpoint_dir $PHI_PATH/7B/trt_ckpt/int8_weight_only/1-gpu/ \
--gemm_plugin bfloat16 \
--output_dir $PHI_PATH/7B/trt_engines/int8_weight_only/1-gpu/
```
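Phi-3-small has roughly 7B parameters, so its weights alone take about 14 GB in bf16 and about 7 GB in INT8. The following minimal sketch shows what "INT8 weight-only" means in general: weights are stored as 8-bit integers with one scale per row, while activations stay in bf16. It assumes symmetric per-row scaling and uses hypothetical helper names. It is a generic illustration, not TensorRT-LLM's actual quantization code.

```python
def quantize_int8_per_row(weights):
    """Symmetric per-row (per-output-channel) INT8 quantization.

    Returns the INT8 values and one floating-point scale per row.
    """
    q_rows, scales = [], []
    for row in weights:
        max_abs = max(abs(w) for w in row)
        scale = max_abs / 127 if max_abs > 0 else 1.0
        q_rows.append([max(-127, min(127, round(w / scale))) for w in row])
        scales.append(scale)
    return q_rows, scales


def dequantize(q_rows, scales):
    """Recover approximate weights (weight-only kernels typically do this inside the matmul)."""
    return [[q * s for q in row] for row, s in zip(q_rows, scales)]


def weight_memory_gb(num_params, bytes_per_param):
    """Rough weight footprint; ignores scales, KV cache and activations."""
    return num_params * bytes_per_param / 1e9


print(weight_memory_gb(7e9, 2))  # bf16 weights: 14.0
print(weight_memory_gb(7e9, 1))  # int8 weights: 7.0
```

The rounding error per weight is at most half a scale step, which is why INT8 weight-only quantization usually costs little accuracy while halving weight memory.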

Now test the base Phi-3 model and the two TensorRT-LLM engines on the summarization task. Each `%%capture` block below is its own notebook cell, because the magic must be the first line of a cell:

```
%%capture phi_hf_results
# Huggingface
!time python3 $PHI_PATH/../summarize.py --test_hf \
--hf_model_dir $PHI_PATH/7B/ \
--data_type bf16 \
--engine_dir $PHI_PATH/7B/trt_engines/bf16/1-gpu/
```

```
%%capture phi_trt_results
# TensorRT-LLM
!time python3 $PHI_PATH/../summarize.py --test_trt_llm \
--hf_model_dir $PHI_PATH/7B/ \
--data_type bf16 \
--engine_dir $PHI_PATH/7B/trt_engines/bf16/1-gpu/
```

```
%%capture phi_int8_results
# TensorRT-LLM (INT8)
!time python3 $PHI_PATH/../summarize.py --test_trt_llm \
--hf_model_dir $PHI_PATH/7B/ \
--data_type bf16 \
--engine_dir $PHI_PATH/7B/trt_engines/int8_weight_only/1-gpu/
```

With the results captured, you can parse the output and plot charts comparing the execution time, ROUGE scores, latency, and throughput of all the models.
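A minimal parsing sketch follows. It assumes the captured text contains lines such as `rouge1 : <score>` and `tokens per second: <value>`, plus the `real` line printed by the shell's `time`. These patterns are assumptions, so compare them with your own captured output and adjust them. `parse_summarize_log` is a hypothetical helper name.

```python
import re

# Assumed log patterns; verify them against your own captured output.
METRIC_PATTERNS = {
    "rouge1": r"rouge1\s*:\s*(\d+(?:\.\d+)?)",
    "rouge2": r"rouge2\s*:\s*(\d+(?:\.\d+)?)",
    "rougeL": r"rougeL\s*:\s*(\d+(?:\.\d+)?)",  # does not match "rougeLsum"
    "tokens_per_sec": r"tokens per second:\s*(\d+(?:\.\d+)?)",
}


def parse_summarize_log(text):
    """Extract the first value of each metric found in a captured summarize.py log."""
    metrics = {}
    for name, pattern in METRIC_PATTERNS.items():
        match = re.search(pattern, text)
        if match:
            metrics[name] = float(match.group(1))
    # Wall-clock time reported by `time`, e.g. "real\t1m2.500s"
    real = re.search(r"real\s+(\d+)m(\d+(?:\.\d+)?)s", text)
    if real:
        metrics["wall_time_s"] = int(real.group(1)) * 60 + float(real.group(2))
    return metrics
```

In the notebook, call it on `phi_hf_results.stdout + phi_hf_results.stderr` (and likewise for `phi_trt_results` and `phi_int8_results`), then plot the three resulting dicts with any charting library.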

2. vLLM

Introduction

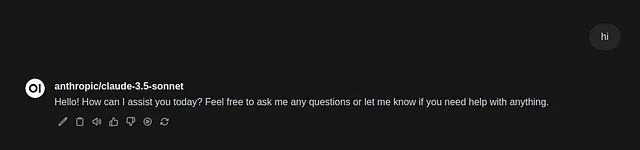

vLLM provides LLM inference and serving with SOTA throughput, Paged Attention, continuous batching, quantization (GPTQ, AWQ, FP8), and optimized CUDA kernels.
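Paged attention stores each sequence's KV cache in fixed-size blocks that do not need to be contiguous, much like virtual-memory pages. The toy allocator below sketches only that bookkeeping idea. The class and method names are hypothetical, and vLLM's real block manager does considerably more.

```python
class ToyPagedKVCache:
    """Toy block allocator illustrating paged KV caching (hypothetical; not vLLM's code).

    Each sequence owns a block table of fixed-size physical blocks. A new block is
    allocated only when the previous one is full, so no memory is reserved up front
    for the maximum sequence length.
    """

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}  # seq_id -> list of physical block ids
        self.seq_lens = {}      # seq_id -> tokens stored so far

    def append_token(self, seq_id):
        """Reserve a slot for one more token and return its (physical block, offset)."""
        n = self.seq_lens.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if n % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free_blocks:
                raise MemoryError("out of KV-cache blocks")
            table.append(self.free_blocks.pop())
        self.seq_lens[seq_id] = n + 1
        return table[n // self.block_size], n % self.block_size

    def free(self, seq_id):
        """Return a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


cache = ToyPagedKVCache(num_blocks=4, block_size=2)
print([cache.append_token("a") for _ in range(3)])  # [(3, 0), (3, 1), (2, 0)]
```

Wasted memory is limited to the unused tail of each sequence's last block, which is what lets an engine keep more sequences in flight at once.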

Usage

Let's evaluate the throughput and latency of microsoft/Phi-3-mini-4k-instruct. First, set up the dependencies and import the libraries:

```
!pip install -q vllm
!git clone https://github.com/vllm-project/vllm.git
!pip install -q datasets
!pip install transformers scipy

import time

from datasets import load_dataset
from tqdm import tqdm
from transformers import AutoTokenizer
from vllm import LLM, SamplingParams
```

Now let's load the model and generate its outputs on a small slice of the dataset:

```python
# Assumption: set this to the dataset column that holds the prompt text (see dataset.column_names)
TEXT_FIELD = "question"

dataset = load_dataset("akemiH/MedQA-Reason", split="train").select(range(10))
prompts = [sample[TEXT_FIELD] for sample in dataset]  # vLLM expects text prompts, not row dicts

sampling_params = SamplingParams(max_tokens=524)
llm = LLM(model="microsoft/Phi-3-mini-4k-instruct", trust_remote_code=True)

def generate_with_time(prompt):
    """Generate one completion and return (text, seconds taken)."""
    start = time.time()
    outputs = llm.generate(prompt, sampling_params)
    taken = time.time() - start
    return outputs[0].outputs[0].text, taken

generated_text = []
time_taken = 0.0
for prompt in tqdm(prompts):
    text, taken = generate_with_time(prompt)
    time_taken += taken
    generated_text.append(text)

# Tokenize the outputs and calculate the throughput
tokenizer = AutoTokenizer.from_pretrained("microsoft/Phi-3-mini-4k-instruct")
total_tokens = 0
for text in generated_text:
    # Count only generated tokens, without BOS/special tokens
    total_tokens += len(tokenizer(text, add_special_tokens=False).input_ids)

print(total_tokens)
print("tok/s", total_tokens / time_taken)
```

Let's also benchmark the model's performance with vLLM's throughput benchmark on the ShareGPT dataset:

```
!wget https://huggingface.co/datasets/anon8231489123/ShareGPT_Vicuna_unfiltered/resolve/main/ShareGPT_V3_unfiltered_cleaned_split.json
%cd vllm
!python benchmarks/benchmark_throughput.py --backend vllm --dataset ../ShareGPT_V3_unfiltered_cleaned_split.json --model microsoft/Phi-3-mini-4k-instruct --tokenizer microsoft/Phi-3-mini-4k-instruct --num-prompts=1000
```

3. LMDeploy

Introduction

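This package lets you compress, deploy, and serve LLMs. It offers efficient inference (persistent batching, blocked KV cache, dynamic split & fuse, tensor parallelism, high-performance CUDA kernels), effective quantization (4-bit inference performance is 2.4× higher than FP16), an effortless distribution server (deploying multi-model services across multiple machines and cards), and an interactive inference mode (remembering dialogue history and avoiding repeated processing of historical sessions). It also supports profiling of token latency and throughput, request throughput, the API server, and Triton inference server performance.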
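Persistent (continuous) batching refills a batch slot as soon as a request finishes, instead of waiting for the whole batch to drain. The simplified sketch below counts decode steps under both policies. It assumes that each request needs a known number of output tokens (at least one), that each request produces one token per step, and that prefill can be ignored. The function names are hypothetical.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Decode steps when each batch runs until its longest request finishes."""
    return sum(max(lengths[i:i + batch_size]) for i in range(0, len(lengths), batch_size))


def continuous_batching_steps(lengths, batch_size):
    """Decode steps when a finished request's slot is refilled at the next step."""
    queue = deque(lengths)
    running = []  # remaining output tokens of each in-flight request
    steps = 0
    while queue or running:
        while queue and len(running) < batch_size:
            running.append(queue.popleft())
        steps += 1
        running = [r - 1 for r in running if r > 1]  # requests with r == 1 finish this step
    return steps


lengths = [8, 1, 1, 1, 1, 1, 1, 1]  # output tokens per request
print(static_batching_steps(lengths, 2), continuous_batching_steps(lengths, 2))  # 11 8
```

With static batching, the short requests paired with the long one sit idle until it finishes. Continuous batching keeps both slots busy, which is where the request-throughput gains come from.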

Usage

Install the dependencies and import the packages:

```
!pip install -q lmdeploy
!pip install nest_asyncio

import nest_asyncio
nest_asyncio.apply()

!git clone --depth=1 https://github.com/InternLM/lmdeploy
%cd lmdeploy/benchmark
```

LMDeploy has developed two inference engines: TurboMind and PyTorch.

Let's profile the PyTorch engine on microsoft/Phi-3-mini-128k-instruct:

```
!python3 profile_generation.py microsoft/Phi-3-mini-128k-instruct --backend pytorch
```

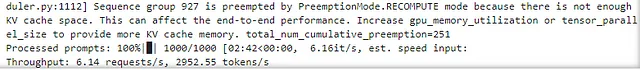

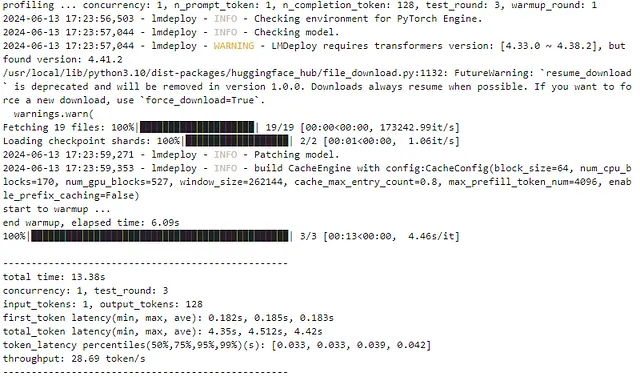

It profiles the engine over multiple rounds and reports the token latency and throughput for each round.

PyTorch engine profiling: token latency and throughput
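Per-round token latency and throughput can be computed from token arrival times, as in the minimal sketch below. The helper names and the exact metric definitions (first-token latency, nearest-rank percentiles of inter-token latency, tokens per second since the request started) are assumptions for illustration. LMDeploy's profiler may define or aggregate them differently.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct in (0, 100])."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def round_token_stats(request_start, token_times):
    """Token latency and throughput for one round, from absolute token arrival times (s)."""
    gaps = [b - a for a, b in zip(token_times, token_times[1:])]  # inter-token latencies
    elapsed = token_times[-1] - request_start
    return {
        "first_token_s": token_times[0] - request_start,
        "token_p50_s": percentile(gaps, 50) if gaps else None,
        "token_p95_s": percentile(gaps, 95) if gaps else None,
        "throughput_tok_s": len(token_times) / elapsed,
    }


print(round_token_stats(0.0, [0.5, 0.75, 1.0, 1.25]))
# {'first_token_s': 0.5, 'token_p50_s': 0.25, 'token_p95_s': 0.25, 'throughput_tok_s': 3.2}
```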

4. MLC-LLM

Introduction

MLC-LLM provides a high-performance deployment and inference engine called MLCEngine.

Usage

Let's install the dependencies, which includes setting them up with conda and creating a conda environment. Then clone the git repository and configure the build:

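```bash
# Option 1: install the prebuilt nightly wheels (CUDA 12.1) into an existing environment
conda activate your-environment
python -m pip install --pre -U -f https://mlc.ai/wheels mlc-llm-nightly-cu121 mlc-ai-nightly-cu121

# Option 2: build from source in a fresh conda environment
conda env remove -n mlc-chat-venv
conda create -n mlc-chat-venv -c conda-forge \
    "cmake>=3.24" \
    rust \
    git \
    python=3.11
conda activate mlc-chat-venv

git clone --recursive https://github.com/mlc-ai/mlc-llm.git && cd mlc-llm/
mkdir -p build && cd build
# Interactive: writes config.cmake. Enable FlashInfer when prompted so that
# config.cmake contains: set(USE_FLASHINFER ON)
python ../cmake/gen_cmake_config.py
cmake .. && cmake --build . --parallel $(nproc) && cd ..

# Install the Python package from the source tree (we are now in mlc-llm/)
conda activate your-own-env
cd python
pip install -e .
```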

To run a model with MLC LLM, we need to convert the model weights into the MLC format. Download the HF model via Git LFS, then convert the weights:

```bash
mlc_llm convert_weight ./dist/models/Phi-3-small-128k-instruct/ \
    --quantization q0f16 \
    --model-type "phi3" \
    -o ./dist/Phi-3-small-128k-instruct-q0f16-MLC
```

Now load your MLC-format model into the MLC engine:

```python
import time

from mlc_llm import MLCEngine
from transformers import AutoTokenizer

# Create engine
model = "HF://mlc-ai/Phi-3-mini-128k-instruct-q0f16-MLC"
engine = MLCEngine(model)

# Now let's calculate throughput
start = time.time()
response = engine.chat.completions.create(
    messages=[{"role": "user", "content": "What is machine learning?"}],
    model=model,
    stream=False,
)
taken = time.time() - start

# Count the generated tokens instead of hard-coding the number
tokenizer = AutoTokenizer.from_pretrained("microsoft/Phi-3-mini-128k-instruct")
output_text = response.choices[0].message.content
num_tokens = len(tokenizer(output_text, add_special_tokens=False).input_ids)
print("tok/s", num_tokens / taken)

engine.terminate()
```

Summary

The TensorRT INT8 model outperforms both the HF model and the regular TensorRT model in inference speed. The regular TensorRT model, however, performs best on the summarization task, achieving the highest ROUGE score of the three models.

LMDeploy delivers up to 1.8× higher request throughput than vLLM on an A100.

Thanks to QueryLoopAI for sponsoring the compute for these experiments.

Also, feel free to drop me a message or:

- Connect with me and find me on LinkedIn and Twitter
- Follow me on 📚 Medium
- Subscribe to my 📢 weekly AI newsletter!
- Check out my 🤗 Hugging Face