Transformers in Artificial Intelligence: All You Need to Know

To translate the given English text into simplified Chinese while keeping the HTML structure intact, you can use the following: ```html

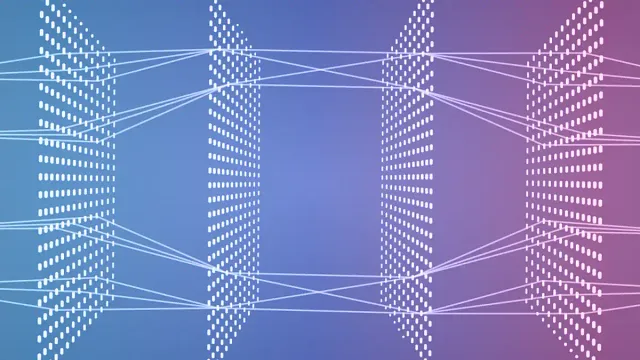

在深入理解变压器之前,我们首先将简要概述变压器的基本概念;变压器模型是一种人工智能模型,主要用于理解和生成人类语言。它被设计用于处理数据序列,例如句子中的单词,并理解每个单词在上下文中的关系。变压器模型基于注意力机制,我们将在本文稍后简要讨论注意力机制。

``` This HTML snippet includes the translated Chinese text while preserving the paragraph (``) structure.

Brief history: The Transformer architecture was first introduced in 2017, with a primary focus on language translation. Notable progress since then includes:

- October 2018: BERT

- February 2019: GPT-2

- October 2019: DistilBERT

- October 2019: BART and T5

- May 2020: GPT-3

- GPT-3.5

- LLaMA

- Falcon

- Codex

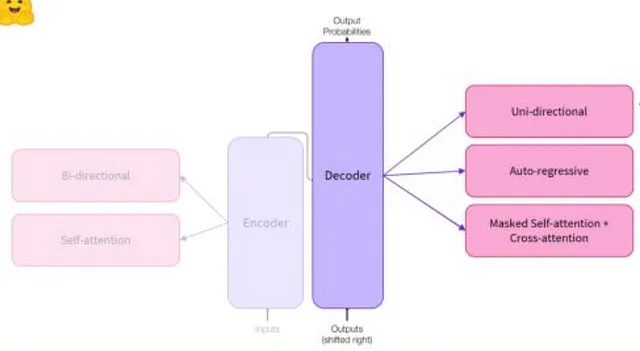

Now, back to Transformers. A Transformer model has two main components: (1) the encoder and (2) the decoder.

Sure, here's the translated text in simplified Chinese while keeping the HTML structure intact: ```html

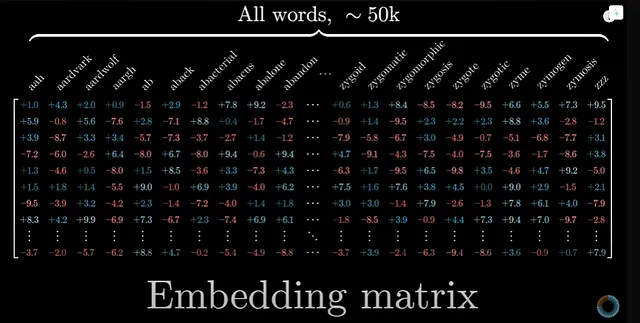

编码器:编码器为每个输入的单词输出一个数值表示。在深度学习中,所有单词都必须表示为向量。单词作为向量表示的简单示例如图所示:

``` This HTML code will display the translated text in simplified Chinese.

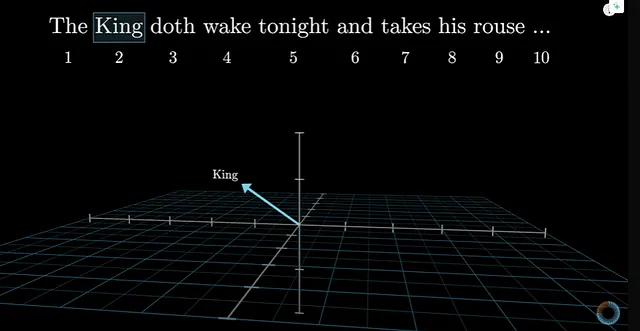

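The important concept here is that the numerical vectors represent words in a contextualized way: each vector captures not only the word itself but also its context. For example, as shown in the figure below, the vector associated with the word "King" is not just a representation of "King". It also takes into account the word's context, such as the word "The" on its left and "doth" on its right, as well as the word's position. The dimension of the vectors is defined by the architecture used. In the BERT base model, each vector has 768 dimensions.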
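To make the idea of word vectors concrete, here is a minimal sketch in Python with NumPy. The toy vocabulary, the random embedding table, and the helper name `embed` are assumptions for illustration; only the dimension 768 comes from the text. A plain lookup table like this is context-free. The contextualized vectors described above come from passing such vectors, together with position information, through the encoder's attention layers.

```python
import numpy as np

# Hypothetical toy vocabulary; a real model uses tens of thousands of tokens.
vocab = {"The": 0, "King": 1, "doth": 2}
hidden_size = 768  # vector dimension quoted above for BERT

# Random table standing in for learned embeddings (illustration only).
rng = np.random.default_rng(seed=0)
embedding_table = rng.normal(size=(len(vocab), hidden_size))

def embed(words):
    """Look up one vector per word; returns shape (len(words), hidden_size)."""
    ids = [vocab[w] for w in words]
    return embedding_table[ids]

vectors = embed(["The", "King", "doth"])
print(vectors.shape)  # (3, 768)
```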

To translate the provided English text into simplified Chinese while keeping the HTML structure intact, you can use the following: ```html

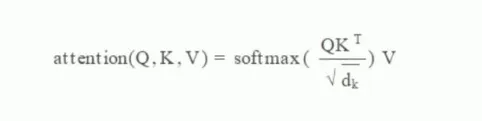

这个过程是通过自注意力机制完成的。这种机制允许我们集中注意力在输入句子的特定方面。这是通过使用一组称为“权重”的参数来完成的,这些参数确定在每个时间步骤上应该给予每个输入多少注意力。

``` This HTML code translates the text and preserves the structure for web display.

This is the formula for computing a word's attention value. As shown in the figure below, for the context vector of the word "it", attention is distributed over the other words, ranked by their attention values.
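The formula itself is not reproduced in the text. As a hedged sketch, the code below implements the standard scaled dot-product attention, softmax(QKᵀ/√d_k)V, which may differ in notation from the formula the author shows. The projection matrices `W_q`, `W_k`, and `W_v` are random placeholders for learned weights, and all sizes are arbitrary.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention for one sequence.

    X has shape (seq_len, d_model). Returns the context vectors
    and the attention weights (one row per query word).
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # (seq_len, seq_len)
    weights = softmax(scores, axis=-1)   # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 16, 8         # arbitrary toy sizes
X = rng.normal(size=(seq_len, d_model))  # stand-in word vectors
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

context, weights = self_attention(X, W_q, W_k, W_v)
# Rank the positions by how much attention word 0 pays to them.
ranking = np.argsort(weights[0])[::-1]
print(context.shape, weights.shape)  # (5, 8) (5, 5)
```

Each row of `weights` plays the role of the attention values: sorting a row gives the ranking of words by how strongly they contribute to that word's context vector.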

Now consider an example of the self-attention mechanism. Suppose you want to learn about AI and the library offers many books on the subject. Instead of picking just one book, you check which books teach you the most about AI and rank them by their attention values. That way you can draw information from several books (first book 1, then book 2, then book 3, and so on). This is how the attention mechanism works.

Some key features of encoders:

- Bidirectional: context from both the left and the right

- Good at extracting meaningful information

- Sequence classification, question answering, masked language modeling

- NLU: natural language understanding

Decoder: In the Transformer model, the decoder plays a key role in generating the output sequence, especially in tasks such as machine translation, text generation, and sequence-to-sequence modeling.

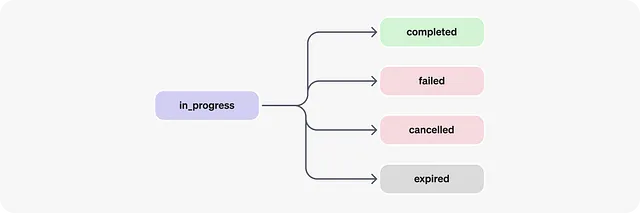

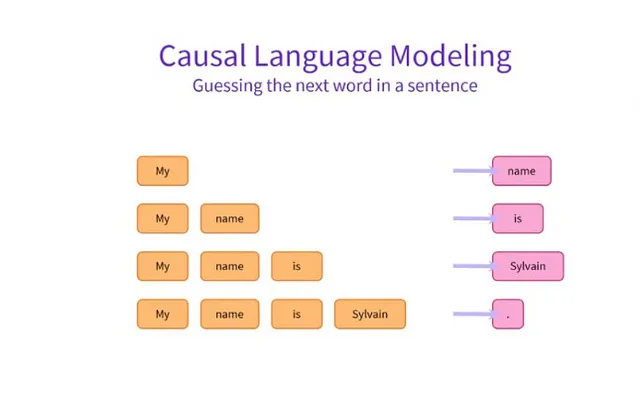

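The figures above illustrate the basics of the decoder.

Unidirectional: Unlike the encoder, the decoder is unidirectional. To predict the next word, the decoder looks only at the preceding vectors. Take a sentence that begins "I like to", followed by a masked word and then "apples". To predict the masked word, the decoder uses only the preceding words "I", "like", and "to". Even though "apples" is already present, the decoder ignores it.

Autoregressive: Autoregressive decoding means generating the output sequence one element at a time, with each element conditioned on the previously generated elements. The process starts from an initial input and generates subsequent tokens step by step until it reaches a special end token or a predefined sequence length.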
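The autoregressive loop can be sketched in a few lines of Python. The next-token table below is a made-up stand-in for a trained decoder (a real model conditions on the whole prefix, not just the last token), and the token names `<start>` and `<end>` as well as the `max_len` parameter are assumptions for illustration.

```python
# Hypothetical next-token probabilities keyed by the previous token.
# A real decoder would compute these from the full prefix.
NEXT_TOKEN_PROBS = {
    "<start>": {"I": 0.9, "apples": 0.1},
    "I": {"like": 0.8, "<end>": 0.2},
    "like": {"apples": 0.7, "I": 0.3},
    "apples": {"<end>": 0.9, "like": 0.1},
}

def generate(max_len=10):
    """Greedy autoregressive decoding: pick the most likely next token,
    append it, and repeat until <end> or max_len tokens are produced."""
    tokens = ["<start>"]
    while len(tokens) - 1 < max_len:
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(probs, key=probs.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start token

print(generate())           # ['I', 'like', 'apples']
print(generate(max_len=2))  # ['I', 'like']
```

The two stopping conditions in the loop correspond to the two described above: the special end token and the predefined maximum length.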

Self-attention and cross-attention:

To translate the given English text into simplified Chinese while keeping the HTML structure intact, you can use the following: ```html

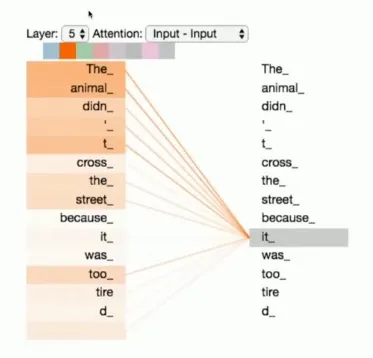

正如我们在编码器中简要讨论的自注意力机制一样,如下图所示,在解码器中,“to”的上下文向量不受“NYC”的影响,这是由于解码器的单向行为。

``` This HTML structure maintains the paragraph `` tag and includes the translated Chinese text within it. Make sure to properly encode the HTML file or document to ensure correct display of Chinese characters.
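Unidirectional behavior of this kind is commonly implemented with a causal mask on the attention scores. The sketch below assumes a hypothetical four-token sentence ending in "to NYC" and uses random scores in place of real query-key products. It only illustrates that, after masking, the row for "to" puts zero weight on "NYC".

```python
import numpy as np

tokens = ["I", "flew", "to", "NYC"]  # hypothetical example sentence
n = len(tokens)

rng = np.random.default_rng(0)
scores = rng.normal(size=(n, n))     # stand-in for Q @ K.T / sqrt(d_k)

# Causal mask: position i may attend only to positions j <= i.
future = np.triu(np.ones((n, n), dtype=bool), k=1)
masked_scores = np.where(future, -np.inf, scores)

# Row-wise softmax; exp(-inf) = 0, so future positions get zero weight.
shifted = masked_scores - masked_scores.max(axis=-1, keepdims=True)
weights = np.exp(shifted)
weights /= weights.sum(axis=-1, keepdims=True)

to_idx, nyc_idx = tokens.index("to"), tokens.index("NYC")
print(weights[to_idx, nyc_idx])  # 0.0
```

An encoder would skip the mask, so every word could attend to the words on both sides of it.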

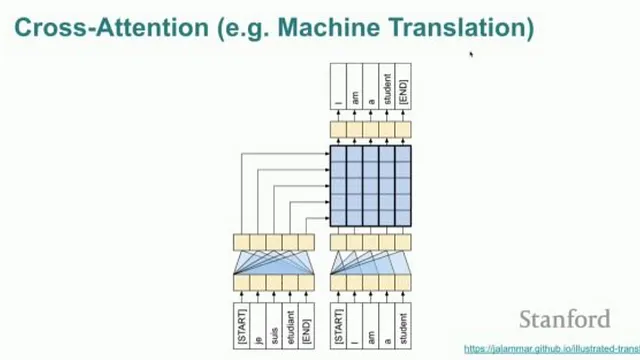

Now let's discuss cross-attention, also known as encoder-decoder attention. It is the mechanism that sequence-to-sequence models use to align the output sequence with the input sequence. It allows the decoder to focus on the relevant parts of the input sequence while generating each element of the output sequence.
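In cross-attention, the queries come from the decoder while the keys and values come from the encoder output. The following is a minimal single-head sketch under that standard formulation. The array sizes, the random inputs, and the names `decoder_states` and `encoder_outputs` are illustrative assumptions, and the learned projection matrices are omitted for brevity.

```python
import numpy as np

def cross_attention(decoder_states, encoder_outputs):
    """Single-head cross-attention without learned projections.

    decoder_states:  (tgt_len, d) -> used as queries
    encoder_outputs: (src_len, d) -> used as keys and values
    """
    d = decoder_states.shape[-1]
    scores = decoder_states @ encoder_outputs.T / np.sqrt(d)  # (tgt_len, src_len)
    scores -= scores.max(axis=-1, keepdims=True)              # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ encoder_outputs, weights

rng = np.random.default_rng(0)
encoder_outputs = rng.normal(size=(6, 8))  # 6 input tokens
decoder_states = rng.normal(size=(3, 8))   # 3 output tokens generated so far

context, weights = cross_attention(decoder_states, encoder_outputs)
print(context.shape, weights.shape)  # (3, 8) (3, 6)
```

Row `t` of `weights` shows which input positions the decoder focuses on while producing output token `t`.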

The Transformer's decoder starts from the special start token `<start>` and generates each word/token of the output sentence based on the context and information it has received. As shown in the example above, the word "My" is predicted first. The model then scores all possible words as candidates for the next position, uses the resulting probability distribution to predict the next token, and repeats this process until the full output has been generated.

Here are some key features of decoders:

1. Unidirectional: access to their left (or right!) context

2. Good at causal tasks; generating sequences

3. NLG: natural language generation

4. Examples of decoders: GPT-2, GPT Neo

Conclusion: Transformers have revolutionized natural language processing. Their ability to handle long-range dependencies and to process data in parallel makes them perform exceptionally well on tasks such as translation and question answering. As Transformer models continue to evolve, they will further advance AI and language understanding. It is nearly impossible to cover everything about Transformers in a single article, so this one covers only a basic understanding of how Transformers work and introduces some of the terminology.